Jul 22, 2024

Database Block Buffer Cache- Memory Structures

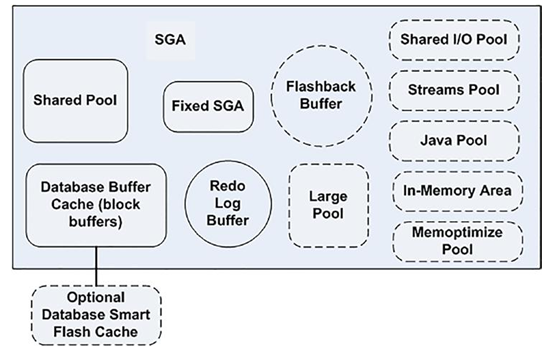

So far, we have looked at relatively small components of the SGA. Now we are going to look at one that is potentially huge in size. The block buffer cache is where Oracle stores database blocks before writing them to disk and after reading them in from disk.

This is a crucial area of the SGA for us. Make it too small and our queries will take forever to run. Make it too big and we’ll starve other processes (e.g., we won’t leave enough room for a dedicated server to create its PGA, and we won’t even get started).

We have three places to store cached blocks from individual segments in the SGA:

•\ Default pool: The location where all segment blocks are normally cached. This is the original—and, previously, the only—buffer pool.

•\ Keep pool: An alternate buffer pool where by convention you assign segments that are accessed fairly frequently, but still get aged out of the default buffer pool due to other segments needing space.

•\ Recycle pool: An alternate buffer pool where by convention you assign large segments that you access very randomly and which would therefore cause excessive buffer flushing of many blocks from many segments. There’s no benefit to caching such segments because by the time you wanted the block again, it would have been aged out of the cache. You would separate these segments out from the segments in the default and keep pools so they would not cause those blocks to age out of the cache.

Note that in the keep and recycle pool descriptions I used the phrase “by convention.” There is nothing in place to ensure that you use either the keep pool or the recycle pool in the fashion described. In fact, the three pools manage blocks in a mostly identical fashion; they do not have radically different algorithms for aging or caching blocks.

The goal here was to give the DBA the ability to segregate segments to hot, warm, and “do not care to cache” areas. The theory was that objects in the default pool would be hot enough (i.e., used enough) to warrant staying in the cache all by themselves.

The cache would keep them in memory since they were very popular blocks. If you had some segments that were fairly popular but not really hot, these would be considered the warm blocks. These segments’ blocks could get flushed from the cache to make room for blocks you used infrequently (the “do not care to cache” blocks).

To keep these warm segments’ blocks cached, you could do one of the following:

•\ Assign these segments to the keep pool, in an attempt to let the warm blocks stay in the buffer cache longer.

•\ Assign the “do not care to cache” segments to the recycle pool, keeping the recycle pool fairly small so as to let the blocks come into the cache and leave the cache rapidly (decrease the overhead of managing them all).

Having to do one of these two things increased the management work the DBA had to perform, as there were three caches to think about, size, and assign objects to. Remember also that there is no sharing among them, so if the keep pool has lots of unused space, it won’t give it to the overworked default or recycle pool.

All in all, these pools were generally regarded as a very fine, low-level tuning device, only to be used after most other tuning alternatives had been looked at (if I could rewrite a query to do one-tenth the I/O rather than set up multiple buffer pools, that would be my choice).

There are up to four more optional caches, the DB_nK_CACHE_SIZE, to consider in addition to the default, keep, and recycle pools. These caches were added in support of multiple block sizes in the database. A database can have a default block size, which is the size of the blocks stored in the default, keep, or recycle pool, as well as up to four nondefault block sizes, as explained in Chapter 3.

The blocks in these buffer caches are managed in the same way as the blocks in the original default pool—there are no special algorithm changes for them either. Let’s now move on to see how the blocks are managed in these pools.

More Details